Current project

Academy professor project (2021.09-2026.08):

Towards vision-based emotion AI (Emotion AI)

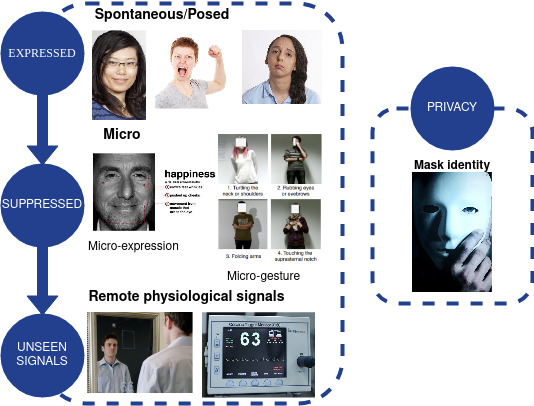

The Emotion AI project aims to investigate novel computer vision and machine learning methodology to study how artificial intelligence (AI) can identify human emotions, and to bring emotion AI to human-computer interaction and computer-mediated human-human interaction for boosting remote communication and collaboration. In addition to expressed visual cues, AI technology is expected to identify suppressed and unseen emotional signals while masking people’s identity information to protect privacy. We will work with leading experts worldwide from different disciplines, e.g., psychology, cognitive science, education, and medicine, as well as industry, to advance the emotional intelligence of AI-based solutions and improve understanding of the significance of emotions in the context of human-computer interaction. The research knowledge generated can accelerate innovations in, e.g., real-world e-teaching, e-service, health, and security applications.

https://www.oulu.fi/blogs/science-with-arctic-attitude/emotion-ai

How EmotionAI is organised

Work packages

WP1: Expressed cues (posed or spontaneous facial & bodily expressions)

Aims: Acquisition of a reliable new dataset; Dynamic descriptors; Temporal modeling; Context analysis

People: Hanlin Mo, Qianru Xu

WP2: Suppressed cues (micro expressions & gestures)

Aims: Acquisition of a reliable new dataset; Dynamic descriptors; Temporal modeling; Context analysis; Subtle motion detection and magnification

People: Yante Li, Haoyu Chen

WP3: Unseen (physiological signal estimation & analysis)

Aims: Acquisition of a reliable new dataset; Temporal modeling; Context analysis; Subtle motion detection and magnification

People: Marko Savic

WP4: Association of different cues

Aims: Acquisition of a reliable new dataset; Context analysis; Multi-modal learning

People: All

WP5: Masking identity

Aims: Acquisition of a reliable new dataset; Emotion cue transfer

People: Kevin Ho Man Cheng, Marko Savic, Haoyu Chen

WP6: Experimental validation

Aims: Acquisition of a reliable new dataset; Context analysis; Multi-modal learning; Emotion cue transfer

People: All


Publications

You can also find all other publications from our unit on our PI's personal site or her UniOulu page.

| Article | Year |
|---|---|
| From Emotion AI to Cognitive AI. G Zhao, Y Li, and Q Xu. International Journal of Network Dynamics and Intelligence (IJNDI) | 2022 |
| Benchmarking 3D Face De-identification with Preserving Facial Attributes. K H M Cheng, Z Yu, H Chen, G Zhao. IEEE International Conference on Image Processing, ICIP 2022 | 2022 |
| Deep Learning for Micro-expression Recognition: A Survey. Y Li, J Wei, Y Liu, J Kauttonen, G Zhao. IEEE Transactions on Affective Computing, Vol. 13, No. 4 | 2022 |
| Geometry-Contrastive Transformer for Generalized 3D Pose Transfer. H Chen, H Tang, Z Yu, N Sebe, G Zhao. Proceedings of the AAAI Conference on Artificial Intelligence (AAAI), 2022 | 2022 |
| AniFormer: Data-driven 3D Animation with Transformer. H Chen, H Tang, N Sebe, G Zhao. Proceedings of the British Machine Vision Conference, 2021. https://github.com/mikecheninoulu/AniFormer | 2021 |